by Federico Placidi and Matteo Milani - U.S.O. Project, May 2011

Otto Laske is a composer internationally known for his work in computer-assisted score and sound composition. In the 1980s, together with Curtis Roads, he co-founded and co-directed the New England Computer Arts Association, NEWCOMP (1981-1991). In 1999, his 25-year work as a cognitive musicologist was introduced to a wider public in Jerry Tabor’s book Otto Laske: Navigating New Musical Horizons (Contributions to the Study of Music and Dance). The book contains a comprehensive bibliography of Otto’s compositions, poems, and musicological writings.

Otto Laske has always been seen as an innovator, both in theory and in composition. After a career in music, he became a knowledge engineer in the 1980s and a psychologist in the 1990s. Since 1999, in addition to his compositional work, he has practiced as a developmental coach and management consultant, using a methodology he created called the Constructive Developmental Framework (www.interdevelopmentals.org). This methodology for assessing individuals’ developmental potential shares certain global structures with Laske’s cognitive musicology of the 1970s and 1980s: it is multi-dimensional, dialectical, and based on empirical research.

[Barry Truax with Curtis Roads and Otto Laske, Cambridge, MA, 1989 - courtesy Barry Truax]

" [...] a theory of music has to understand not musical results but rather the mental processes that lead to such results." - Otto Laske

"Looking back at 43 years of making electronic music, it's clear to me that ever since I began composing in 1964, the development of music technology strongly shaped my compositional ideas. The artistic task seemed to be to show that new technologies can indeed produce 'art.' At the same time, these technologies brought forth new compositional ideas not elaborated before. In short, a stark interdependency of compositional thinking and technological possibilities prevailed. When I listen to my various compositional adventures today, there is, for me, a certain aesthetic unity that binds all of my pieces together. It will be up to historians (once they have become knowledgeable about the technology underlying these pieces) to judge them from a more balanced perspective than is perhaps possible today." - Otto Laske, January 2010

Computer Software Based Composition

My background is in both philosophy and music, not to speak of poetry. I studied with Adorno in Frankfurt, a philosopher and composer who shaped my thinking for a decade (1956-1966) and who also helped me emigrate to the U.S. in 1966 to study computer music. He also made me aware of the Darmstadt Music Festival, where I met Stockhausen, Gottfried Michael Koenig, Ligeti, and Boulez, among others. The first time I went to Darmstadt was in 1963. I was especially taken with Stockhausen as a teacher and with Pierre Boulez’s notion of orchestration virtuelle ("virtual orchestration"), by which he meant that a professional composition contains elements that are not immediately obvious, or are even hidden, but that have to be there to bring a rich composition to life. This notion of Boulez’s has accompanied me all my life, and not only in music, as much as P. Klee’s Das bildnerische Denken has.

My main musical mentor, although not as a teacher of composition, is Gottfried Michael Koenig. I met Koenig in 1964 when he first presented Project 1 to colleagues. While his program was unfamiliar to me, I had previously studied with a German composition teacher, Konrad Lechner, who was strongly influenced by medieval music as well as by the works of Webern and Stravinsky. He had taught me something called micro-counterpoint, by which he meant minutely working out selected musical elements (e.g., 10 rhythms, tones, or tone colors) and bringing them into the form of a cantus firmus on which to base a larger composition, all under the intense guidance of the ear.

When I listened to Koenig’s lecture at that time, I understood him to be talking about parametric counterpoint, a counterpoint of parameters like pitch, duration, instrument color, register, volume, and so forth, as Lechner had done. The difference was his use of computers for composition. What captivated my interest in computers was not the hardware, but the idea that compositions could be designed on the basis of contrapuntal ideas, so that different parameter streams (lists) could be merged to create new sounds, either in ideal time (through notation) or in real time (electronically). In all the computer programs of the sixties, such as those by Xenakis and Mathews, what interested me was the possibility of expanding my contrapuntal, multi-dimensional way of working.

When I sit down to compose music using a program like Project 1 or Kyma, what I find of central interest is the feedback loop between the frozen and the living knowledge that is engaged: the frozen knowledge embodied in the computer software, whether knowledge of an instrument, waveforms, and envelopes or knowledge about deforming and sequencing visual images, and the living knowledge in the composer’s mind. In my writings, including those in Computer Music Journal, I always emphasized that a computer used in music (including its user interface) should have as much intelligence as possible, including the ability to learn from the user. I have always been disappointed that, to date, programmers have made this possible only to a small extent. My idea was to let composers build new “task environments”, a kind of artistic homestead in which they could reuse fruitful ideas and presets, or even come to understand their own compositional process better.

I think that the new concepts engendered by computers are valid in many artistic fields. When I work with my painting program today, or make animations accompanied by music, I find much greater openness to the idea of having the computer program “know its user”. It seems to me that the visual programs I am using have a higher-level intelligence than present music programs. (I am not a live performer of music, which is where much of the available computer intelligence seems to be located these days.)

In music, I guess, I am an “old-fashioned” composer, in the sense that I typically work from numerical templates such as those produced by Koenig’s Project 1. I refer to this way of working as “score synthesis”, in contrast to “sound synthesis”, whether I am engaged in instrumental, vocal, or electronic composition. Algorithmic composition never really caught on in the US, except perhaps in Milton Babbitt’s work. As to Koenig’s Project 1, it seems I have remained the only composer who also used it in electronic composition, although composers like Barry Truax have, of course, been using “algorithmic composition” all their lives, much influenced by Koenig’s work, as I have been.

Score synthesis was a European idea stemming from Xenakis, Koenig, and a few others, such as L. Hiller in the US. My goal as a composer over 45 years has been to bring score synthesis (the computation of score parameters) and sound synthesis (the computation of acoustic material based on “reading” score parameters) into balance with each other, giving equal attention to both. This meant that I always had to use at least two different programs (not originally made to work together), one for score synthesis and another for sound synthesis. And since the algorithmic paradigm of composition requires bringing together “score” and “sound” (whether in CSound or Kyma), the art of composition for me became that of marrying the right set of instruments to the right score by ear.

The Project 1 Experience: Interpretative Composition

In the 1960s and 1970s, very different composition programs came into being. Some made it easy to create numerical material but required intensive interpretation by the composer, while others required elaborate input (such as Koenig’s Project 2), and their output could only be accepted or rejected.

Koenig's Project 1, like Xenakis's ST/10, is of the former kind. It requires very little input and gives the composer a large amount of data to interpret, either for instruments or for electronic sound. I found that the Project 2 type of program didn't suit me as well as Project 1, because I love the freedom of interpreting data, often using the same score for an electronic as well as an instrumental composition (which probably nobody would hear or need to know). However, I am still curious about the Project 2 type of program and may use it some time in the future after all.

Both programs show me that it is the composer’s mind that creates music, not the sound or the machine, because the composer can obviously use any kind of template, even – as Stockhausen used to say – a telephone book.

I called my work with Project 1 “interpretative composition”, because I was interpreting data generated by computer software according to guidelines programmed by a composer. I also refer to it as “rule-based” rather than “model-based” composition, meaning that in each new composition I followed a different set of rules, some inherent in the program, others stipulated by me. It is the feedback loop between my own set of rules and the computer’s that interested me. As to the difference between following rules and following models, I thought little of artists who used others’ compositions, or their own, as models. I wanted to start from scratch each time, although, of course, I brought my own tradition into being over many years of composing.

As an abstract thinker, I was also of the persuasion that one should plan compositions “top down”, by stipulating rules for how a score or set of sounds ought to be created, and not bother about details except through continued rehearsal, listening to the results again and again (Berg’s “Durchhören”, or listening through). It was a matter of what to control when: not controlling everything, but knowing which controls one could delegate to a computer slave.

Specifics of Koenig’s Project 1

To be specific, in Koenig’s Project 1 (created in 1967 and continuously refined until the 1990s), a composer works with 7 degrees of change for all parameters (such as entry delay, pitch, register, and volume). Degree 1 represents constant change, degree 7 stands for minimal change (redundancy), and degree 4 represents a compromise between the two.

Now imagine the fun of being able to plan, and carry out, a creative process in terms of the different parameters that need to come together to make a new composition! Should entry delay (the delay between subsequent sound entries) vary according to degree of change 1, 4, or 7? If you choose 7, what degree of change should other parameters, such as pitch or volume, follow? If, in addition to using Project 1, you stipulate your own interpretations of what “register 4” or “volume 6” is to stand for, you are in a creator’s paradise, because you can shape your rule stipulations to whatever strikes your fancy, keeping in mind the limits of the medium you are writing in, whether instrumental, vocal, or electronic. Each movement of your composition will have its own unique “parametric signature” that is never repeated anywhere in your life’s output. And with regard to electronic music, you might arrive at a studio other than your own, e.g., at the GMEB in Bourges, and hear your score for the first time in your life, with two weeks left to convert it to sound.
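To make the idea of degrees of change more concrete, here is a toy model in Python. It is a hypothetical sketch, not Koenig’s actual Project 1 algorithm: it simply assumes that a parameter at degree d holds each randomly chosen value for d consecutive events, so that degree 1 changes on every entry and degree 7 repeats each value seven times. The value palettes and the function names `parameter_stream` and `make_score` are illustrative assumptions.

```python
import random
from typing import Dict, List, Sequence


def parameter_stream(values: Sequence[int], degree: int, length: int, seed: int = 0) -> List[int]:
    """Generate `length` values whose rate of change is set by `degree` (1..7).

    Hypothetical model (not Koenig's algorithm): each chosen value is held
    for `degree` consecutive events, so degree 1 changes on every event and
    degree 7 repeats each value seven times (maximal redundancy).
    """
    if not 1 <= degree <= 7:
        raise ValueError("degree must be between 1 and 7")
    if len(set(values)) < 2:
        raise ValueError("need at least two distinct values")
    rng = random.Random(seed)
    stream: List[int] = []
    current = rng.choice(list(values))
    while len(stream) < length:
        stream.extend([current] * degree)  # hold the value for one group
        # switch to a different value so each group boundary is a real change
        current = rng.choice([v for v in values if v != current])
    return stream[:length]


# Hypothetical value palettes; the composer stipulates what each number means.
PALETTES: Dict[str, List[int]] = {
    "entry_delay": [1, 2, 3, 5, 8],  # e.g. in sixteenth notes
    "pitch": list(range(60, 72)),    # MIDI note numbers
    "register": [1, 2, 3, 4, 5, 6, 7],
    "volume": [1, 2, 3, 4, 5, 6, 7],
}


def make_score(n_events: int, degrees: Dict[str, int], seed: int = 0) -> List[Dict[str, int]]:
    """Combine one stream per parameter into a list of events (rows)."""
    columns = {
        name: parameter_stream(PALETTES[name], degrees[name], n_events, seed + i)
        for i, name in enumerate(PALETTES)
    }
    return [dict(zip(columns, row)) for row in zip(*columns.values())]
```

For example, `make_score(12, {"entry_delay": 7, "pitch": 1, "register": 4, "volume": 6})` yields a steady pulse (entry delay held for seven events) under constantly changing pitches; what each number finally stands for is left, as in the text, to the composer’s interpretation.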

By using Project 1, I was able to plan the FORM of my compositions, the main esthetic concern of every composer, in the minutest detail, using a global top-down design based on parametric counterpoint. I was not composing with “tones” but at a meta-level, with “parameters” whose streams coalesced to create novel sound. And I could do so not only for sequencing scores (whose length I determined); I could also MERGE (mix) scores to my heart’s content. (This procedure is found in all of my electronic compositions after 1999.)

Of course, the computer (luckily) could not help me sequence or merge different “sub-scores”, as I called them. I was challenged to do so by ear, “rehearsing” pieces like a conductor (without ever needing one). The computer couldn’t even guide me in designing instruments (e.g., in Kyma) that would be ideal for playing a particular score. I was free, and obliged, to do so myself (which shows that “algorithmic composition” is a very misleading term). And so I often ended up “orchestrating” a particular score with different sets of instruments (called “orchestras”), and then mixing different sonic renditions of the same score into a final complex result. It is here that I practiced what Boulez had called orchestration virtuelle, because many fine details of a composition could easily be generated by superimposing different instruments (tone colors) whose onsets were slightly offset in time against each other.
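The practice of superimposing several renditions of one score can be sketched as follows. This is an illustrative assumption rather than a description of Laske’s Kyma setup: a score is reduced to hypothetical (onset, pitch) pairs, each “orchestra” is just an instrument name plus a small onset offset, and the renditions are mixed by merging their event lists in time order with Python’s `heapq.merge`.

```python
import heapq
from typing import List, NamedTuple, Sequence, Tuple


class Event(NamedTuple):
    onset: float     # start time in seconds (hypothetical unit)
    instrument: str  # name of the instrument playing the event
    pitch: int       # MIDI note number (assumption)


def orchestrate(score: Sequence[Tuple[float, int]], instrument: str, offset: float = 0.0) -> List[Event]:
    """Render one time-ordered (onset, pitch) score with one instrument, shifted by `offset` seconds."""
    return [Event(onset + offset, instrument, pitch) for onset, pitch in score]


def overlay(*renditions: List[Event]) -> List[Event]:
    """Mix several time-ordered renditions into one event list sorted by onset."""
    return list(heapq.merge(*renditions, key=lambda e: e.onset))


score = [(0.0, 60), (0.5, 64), (1.0, 67)]
mix = overlay(
    orchestrate(score, "flute"),
    orchestrate(score, "glass", offset=0.03),  # slightly delayed second tone color
    orchestrate(score, "bell", offset=0.07),
)
```

Merging rather than re-sorting relies on each rendition already being in time order, which holds here because a constant offset preserves the order of the original score.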

Of course, in instrumental composition I could only sequence, not mix, scores, although even here I could (theoretically) have decided to orchestrate the string section with one score and the brass section with another. Ultimately, electronic music won out in my production of music. I could easily produce a final score with 18, 24, or 36 voices per sound entry by overlaying different scores played by different instruments, and I could vary the “parametrical depth” of the sound from second to second. The compositional freedom I enjoyed using Koenig’s Project 1 and Scaletti’s Kyma was limitless.

I am speaking here of the most recent phase of my work with computer music programs, during the first decade of the 21st century. The beginnings of this labor in the 1970s and 1980s were far less idyllic. For one thing, not having access to a computer running Project 1, I would produce my numerical scores manually, by “cutting and pasting” parameter lists from older score printouts I had retained and copied. This allowed me to design new scores in which the 7 degrees of change in Project 1 were deployed quite differently than in previous scores, whether for instruments, voices, or tape. Also, there was initially no “translator” from Project 1 scores into the DMX1000, CSound, or Kyma formats, so all of this work had to be done by hand. So it was a breakthrough in the early 21st century when Koenig provided me with a formatting of Project 1 scores that could actually be read by CSound and Kyma. No longer did one have to wait a week, as in the 1970s, to hear a short piece one had programmed, by which time one had already forgotten the compositional idea one had put into the computer a week earlier.
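As an illustration of what such a translator does, the sketch below turns a list of parameter rows into Csound score statements (`i` statements, whose first three p-fields are instrument number, start time, and duration, with the score closed by `e`). It is not Koenig’s formatter: the row layout (entry delay, duration, pitch, amplitude), the rule that each entry delay is the time until the next entry, and the use of p4/p5 for pitch and amplitude are assumptions, and the meaning of p4/p5 depends entirely on the Csound orchestra that reads the score.

```python
from typing import Iterable, Tuple

# Hypothetical row layout: (entry_delay, duration, pitch, amplitude)
Row = Tuple[float, float, float, float]


def to_csound_score(rows: Iterable[Row], instr: int = 1) -> str:
    """Translate parameter rows into Csound score i-statements.

    Assumes each entry delay is the time from this entry to the next one,
    so start times are the running sum of the preceding delays.
    """
    lines = []
    start = 0.0
    for delay, duration, pitch, amplitude in rows:
        # p1 = instrument, p2 = start, p3 = duration; p4/p5 are orchestra-defined
        lines.append(f"i{instr} {start:g} {duration:g} {pitch:g} {amplitude:g}")
        start += delay
    lines.append("e")  # end-of-score statement
    return "\n".join(lines)
```

With rows `[(0.5, 1.0, 60, 0.3), (0.25, 0.5, 64, 0.5)]`, the second event starts at 0.5 seconds, that is, after the first row’s entry delay.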

The New England Computer Arts Association (NEWCOMP)

The 1980s were a heady time for shocking the bourgeois (“scandaliser le bourgeois”) who listened to music at Boston’s Symphony Hall. Curtis Roads was a very good friend of mine at that time, and for nearly a decade we worked together trying to put the focus on the production, rather than the consumption, of music. (It was only at the end of the 1980s that I could finally build my own studio, so that I could experiment with musical ideas whenever I pleased, rather than having to travel to Vancouver, Bourges, or Ötwil am See to make a composition.)

I met Curtis (then editor of Computer Music Journal) in 1980 when he came to live in Cambridge, MA. After some talks we decided to form an association of composers, initially for presenting computer music concerts, later expanded to other computer arts, like computer poetry, computer dance, and what we then called “visuals”. At that time I was married to a choreographer, and I taught her to use Koenig's Project 1 in designing choreographies, which she did by using parameter lists to determine “gestural events” for her dancers, who collaborated to make a composition.

Curtis and I founded the New England Computer Arts Association in 1981 (it was renamed the Computer Arts Association in 1984). During the time we worked together, Curtis and I gave about 65 concerts, planning every detail of them. Artists came from around the US to be presented by us. Curtis left NEWCOMP in 1985, and I carried on until 1991 when, as I could not find a worthy successor, NEWCOMP ceased to exist. We presented concerts not only in Cambridge (Massachusetts), but also in Europe (Warsaw, Stuttgart, Tbilisi). In addition, we sponsored an international computer music competition, which became internationally known as the NEWCOMP Music Competition.

At that time, both he and I were sick and tired of the concert music scene in Boston, which was all about consuming music. We felt that what mattered was producing, not consuming, music, and so we also offered composition courses for computer music beginners, and symposia showcasing creative work. Our concert venue was a church in Cambridge, near Harvard University (where, in 1992-1995, I would study developmental psychology).

NEWCOMP was a group of about 15 artists and composers that held regular meetings in my house, complete with a President, Vice-President, 2 Artistic Directors, and a Treasurer, all volunteers. We invited composer colleagues in the US and Europe (Koenig, Lansky, Ruzicka, the GMEB, and others) to judge the works submitted to the competition. NEWCOMP members came together to make the final selection of 3 winners. For ten years, NEWCOMP was the only association in the US that presented regular computer music and mixed computer arts concerts outside of academia. We “schlepped” loudspeakers, advertised, sold tickets, and in this way performed a lot of new music. It was a great pleasure.

Laske's work in Cognitive Musicology

I was always interested in what knowledge is, that is, in epistemology. What does it mean to know? How does knowledge develop and work in the world? As a result, the essential question I posed in my cognitive musicology between 1970 and 1995 (to be published in part in three volumes by Mellen Press by 2013) is: "what is musical knowledge?"

As you know, musicologists have formulated hypotheses as to how Beethoven may have composed his string quartets, but they don't have enough data to establish any sound theories about that. So that was the project that history handed to me. I was tired of the old musicology I had studied in Frankfurt am Main. In my research after 1970, I suggested that, given the existence of computers, the time had come to branch out and study not only musical products (“compositions”) but also the mental processes by which living composers brought their works into being. I was especially eager to understand the link between the mental process that led to a particular composition, carried out by using computer programs, and the work that resulted: how was musical form actually created? I was convinced that one could never derive the process from an existing work of a dead composer. Even old music was brought to life only through mental processes in the present, and so, in a way, there was no pre-existing music; it all occurred NOW. I also thought, and still think, that conventional musicologists made too many illicit assumptions, called “interpretations”, that couldn’t be empirically proven and were largely arbitrary.

Therefore, when I worked in Utrecht between 1970 and 1975 upon Koenig’s (godsend) invitation, inspired by what he called “composition theory”, I decided to use computer programs to work out empirical theories about how music is thought or “made”, whether in music analysis, conducting, composing, or listening. I rejected notation as a worthwhile medium and started working directly with electronic sound produced by the Institute of Sonology’s PDP-10 computer. Influenced by J. Piaget, the geneticist of knowledge, as well as by N. Chomsky’s Transformational Grammar and P. Schaeffer’s Traité des Objets Musicaux, my goal was to understand the musical thinking of children.

" [...] At the Institute of Sonology, Gottfried Michael Koenig and Otto Laske and a host of really excellent teachers were formulating the digital future. That may sound overly dramatic, but they had this wonderful set of analog studios, with a lot of custom made equipment and two and four channel machines for recording it and banks of voltage control equipment that defied description. It was very, very complex. A long way from the Buchla and Moog synthesizers I’d been weaned on at UBC. Stan Tempelaars was teaching modern psychoacoustics that he had gotten from Reiner Plomp, which I now realize was pretty cutting edge at the time. Koenig was teaching composition theory but also programming and macro assembly language for the PDP-15, almost as fast as he was learning it himself. And suddenly, for the first time, I found myself with the mini-computer; that’s what they were called, even though they took up one huge wall of a room. But they were single user, not mainframe computers like Max Mathews had. Although the only means of interaction was the teletype terminal, you could have real-time synthesis and interact with it as a composer rather than writing programmes. And I developed this thing called the POD System for interactive composition with synthesis, which was a top down type of approach." - Barry Truax

While society used computers to make profit, I looked at the computer as a machine that could strengthen (not replace!) the creative mind, thus working against the grain of technology. I felt artists could finally become independent of the many conventions that bind them in their work and in their performances, and simply satisfy their own criteria for what was “good art” (never mind the conductors who wouldn’t play their work). That was the political background.

Theoretically speaking, I was waking up to Artificial Intelligence as a means to “simulate” creative mental processes. For this reason, when I returned to the US in 1975, I applied for a grant to study at Carnegie-Mellon University, Pittsburgh, with one of the luminaries of A.I., Nobel Prize winner Herbert Simon (himself an excellent cello player). Together with A. Newell, another founder of A.I., Simon had created the first chess computer program that could beat a human player. He had also invented “protocol analysis”, a way of analyzing the intellectual moves of a human subject engaging with a particular task, such as playing chess or understanding spoken language.

So it was natural to wonder whether a computer program could also “protocol”, or document, what children did compositionally with electronic sound (as I had been trying to understand in the Utrecht OBSERVER programs, built together with B. Truax in FORTRAN), and whether such programs could simulate, or at least intellectually support, musical composition, and not only for children. It was an idea that was in the air, so to speak.

As this shows, thinking about composition as a theorist and making music were very closely linked in my work. Not that composition was becoming a “science”; rather, composers would do well to get out of their studios and sniff the air of science, as many composers began to do (e.g., James Tenney, not to speak of Xenakis and Koenig). I felt composers needed to know as much as they could about computers and composition theory in order to understand their own creative process, and to become more dynamic and flexible in using new processes rather than following old “models”, even their own.

From documenting children’s work in composition at the Instituut voor Sonologie, Utrecht (1970-75), I proceeded to simulating compositional processes by writing A.I. programs (1975-77) at Carnegie-Mellon. However, this was a very large undertaking, and I never managed to obtain the funding to work with others on the project, which I finally had to give up in order to return fully to composition (1995).

EMF is bringing out a 2-CD set, “Otto Laske: The Utrecht Years”, which features 9 pieces I composed at the Institute of Sonology over 5 years.

Visual Music

[Lanesville seen by camera - Otto Laske]

My artistic life is far from over. I have often been told that my music is very visual and contains many visual cues. Therefore, in 2009 I began to think: composition is composition, so why not extend my compositional work into the visual domain? (I have also written a substantial body of poetry, in German (1955-1968) and in English (1967-1995), still unpublished.)

In 2008, after having begun work in watercolour and oil, I discovered what today is called visual music through Dennis Miller, a fellow composer living near me and one of the pioneers of the new medium. (I always meet the right people at the right time, it seems.) Visual music is a discipline still in its infancy, but it has its roots in the 1920s and 1930s, when artists such as Oskar Fischinger in Germany began to experiment with abstract films called “absolute film”, since they had no narrative or storyline and instead focused simply on (often geometrical) shapes and colors. The pioneers of visual music had the vision that it was possible, or should be possible, to bring abstract painting in the sense of Kandinsky and Klee to film or video, and to link it to music (instrumental music at first, and later electronic music).

In my present work with Studio Artist and Cinema 4D (the first a program for digital painting, the second for animation), I have again taken up the practice of using two different programs not initially meant to work with one another. But at least they can “talk” to each other now, which was not the case with early music programs. And so I am gradually learning to go back and forth between these two programs, not to mention that I also need to use a sound processor such as Sound Forge and a movie-making program such as Vegas Movie Studio, and bring them all together to produce a visual music video.

For the time being, I have produced a gallery of images that will be accessible on www.ottolaske.com in the near future. Even for an experienced composer like myself, learning and using visual programs presents a steep learning curve. I am therefore putting my poetry and music on hold in order to become a digital painter and animator. I have given myself two or three years to learn these programs before I can turn out anything that would satisfy my artistic standards.

Again, the computer is the "leading voice" that challenges me as an artist to bring together music and image after a lifetime of composition. I feel very fortunate to be able to do this at my age (75), additional years permitting.

[works by Otto Laske @ silenteditions.com]

[www.cdemusic.org]

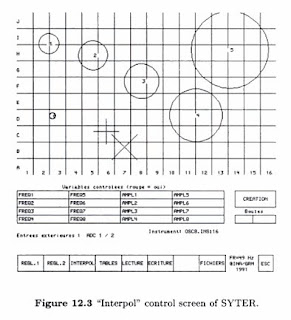

"Interpol" control screen of SYTER